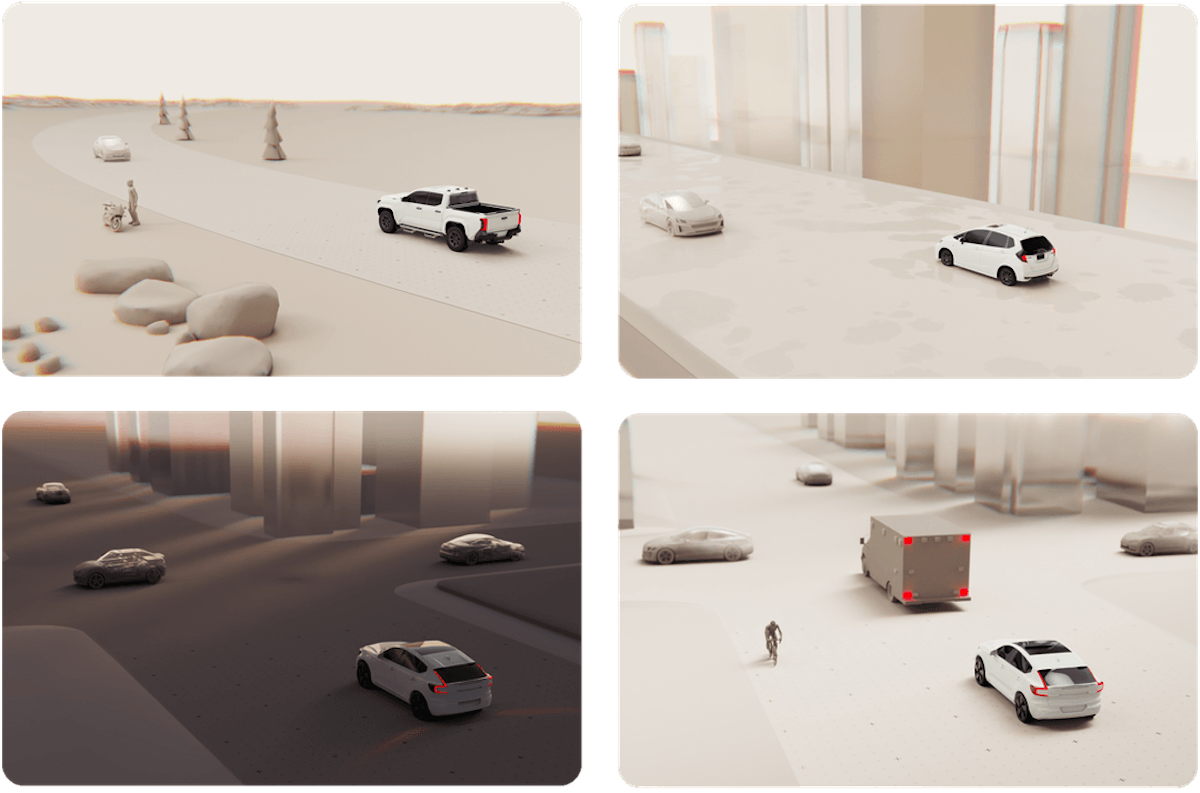

The core of Helm.ai and Honda’s collaboration is an end-to-end (E2E) AI architecture, in which the AI learns driving behavior directly from raw sensor inputs, such as camera images and radar data, instead of relying on traditional intermediate layers that reconstruct the environment. This design lets the AI interpret its surroundings and make decisions in a way closer to human reasoning, forming a continuous chain from “perception” to “action.” By integrating Helm.ai’s proprietary Deep Teaching™ and generative AI technologies, the system is designed to keep improving without extensive manual data labeling. The partners expect this to give it a clear advantage over conventional sensor-fusion-based architectures in both development efficiency and scalability.
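
To make the “perception to action” idea concrete, here is a deliberately tiny Python sketch of an end-to-end policy trained by behavior cloning. It is not Helm.ai’s or Honda’s method, whose details are proprietary. The four-value sensor vector, the `expert_steering` function standing in for recorded human driving, and every other name below are hypothetical. The point is only structural: one learned function maps raw readings straight to a steering command, with no hand-built intermediate model of the scene. Production E2E systems use deep neural networks on camera and radar streams, not a single linear layer.

```python
import random
from typing import List, Tuple

Vector = List[float]


def expert_steering(raw: Vector) -> float:
    """Hypothetical stand-in for a human driver's recorded steering.
    In this toy, the 'correct' command is a fixed weighted sum of raw readings."""
    weights = [0.5, -0.25, 0.75, -1.0]
    return sum(w * x for w, x in zip(weights, raw))


def make_demonstrations(n: int, rng: random.Random) -> List[Tuple[Vector, float]]:
    """Pair synthetic raw sensor vectors with the expert's steering output."""
    demos = []
    for _ in range(n):
        raw = [rng.uniform(-1.0, 1.0) for _ in range(4)]
        demos.append((raw, expert_steering(raw)))
    return demos


class EndToEndPolicy:
    """Maps raw sensor values directly to a steering command with one learned
    linear layer; there is no explicit environment-reconstruction stage."""

    def __init__(self, n_inputs: int) -> None:
        self.w = [0.0] * n_inputs
        self.b = 0.0

    def act(self, raw: Vector) -> float:
        return sum(w * x for w, x in zip(self.w, raw)) + self.b

    def train(self, demos: List[Tuple[Vector, float]], epochs: int = 50, lr: float = 0.05) -> None:
        # Behavior cloning: stochastic gradient descent on squared imitation error
        for _ in range(epochs):
            for raw, target in demos:
                err = self.act(raw) - target
                for i, x in enumerate(raw):
                    self.w[i] -= lr * err * x
                self.b -= lr * err


# Usage: learn from 200 synthetic demonstrations, then act on a new input
rng = random.Random(0)
demos = make_demonstrations(200, rng)
policy = EndToEndPolicy(n_inputs=4)
policy.train(demos)
test_input = [0.2, -0.4, 0.1, 0.3]
print(round(policy.act(test_input), 3), round(expert_steering(test_input), 3))
```

The training targets in this sketch are recorded driver actions rather than hand-drawn annotations, which is one reason E2E approaches can reduce the manual labeling workload.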

Traditional autonomous driving development relies heavily on massive labeled databases, in which human operators or semi-automated tools annotate sensor data so that models can be trained through supervised learning. Helm.ai’s unsupervised learning technology, by contrast, lets the AI identify features and relationships within the data on its own, discovering environmental characteristics without predefined answers. As a result, Honda can train driving algorithms without deploying large fleets of test vehicles or employing vast labeling teams, significantly reducing development time and cost. The approach also makes the models more adaptable, allowing them to adjust quickly to different traffic cultures and road conditions worldwide, an essential strategic advantage for a global brand like Honda.
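
Helm.ai has not published how Deep Teaching works, so the sketch below substitutes k-means clustering, a classic and far simpler unsupervised algorithm, purely to illustrate finding structure in data without predefined answers. The “radar readings” (distance in meters, speed in m/s) and the two groups they come from are hypothetical synthetic data; the algorithm is never told which reading belongs to which group.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def kmeans(points: List[Point], k: int, iters: int = 20, seed: int = 0) -> Tuple[List[Point], List[int]]:
    """Plain k-means: alternate nearest-centroid assignment and centroid update."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    assignment = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid
        for i, p in enumerate(points):
            assignment[i] = min(range(k), key=lambda c: math.dist(p, centroids[c]))
        # Update step: move each centroid to the mean of its members
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return centroids, assignment


# Usage: hypothetical unlabeled readings from slow nearby and fast distant objects
rng = random.Random(1)
slow_near = [(rng.gauss(10, 1), rng.gauss(1.5, 0.3)) for _ in range(30)]
fast_far = [(rng.gauss(40, 2), rng.gauss(25, 2)) for _ in range(30)]
readings = slow_near + fast_far  # no labels are passed to kmeans
centroids, groups = kmeans(readings, k=2)
print(sorted(centroids))
```

k-means separates the two groups on its own. A real perception system would learn far richer features, but the underlying idea of learning from unlabeled data is the same.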

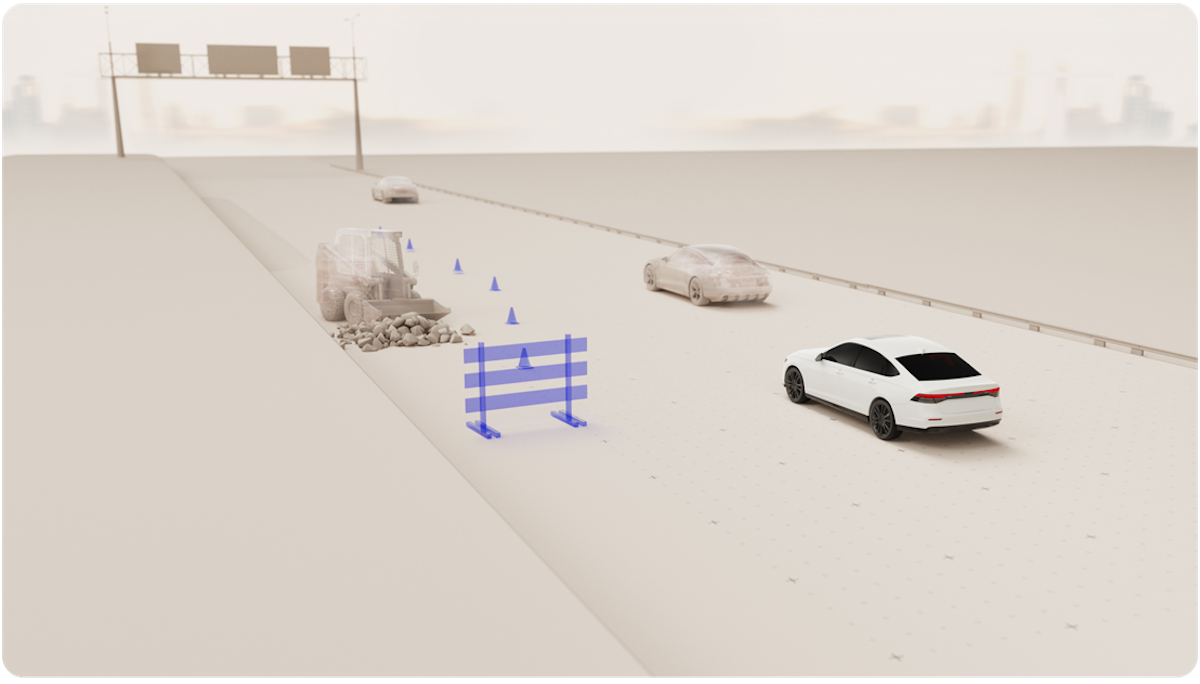

Today’s leading players follow two dominant paths. Tesla uses a vision-based E2E learning model trained on massive amounts of real-world driving data, while Waymo and Cruise have built their systems around multi-sensor fusion and high-definition maps to ensure redundancy and precise localization. Honda and Helm.ai’s E2E strategy sits between these two but leans toward a philosophy of “less sensing, more intelligence.” Helm.ai’s unsupervised learning allows the AI to learn efficiently from less data and with simpler hardware configurations, enabling Honda to develop lower-cost, mass-producible ADAS and semi-autonomous driving technologies.

Whereas Tesla relies on billions of kilometers of driving data, Honda aims to use Helm.ai’s Deep Teaching™ to reach comparable decision-making capability with far less data, while also reducing its reliance on expensive LiDAR sensors and high-definition maps. This pragmatic cost structure positions Honda to deliver driver-assistance systems that are both advanced and commercially competitive.

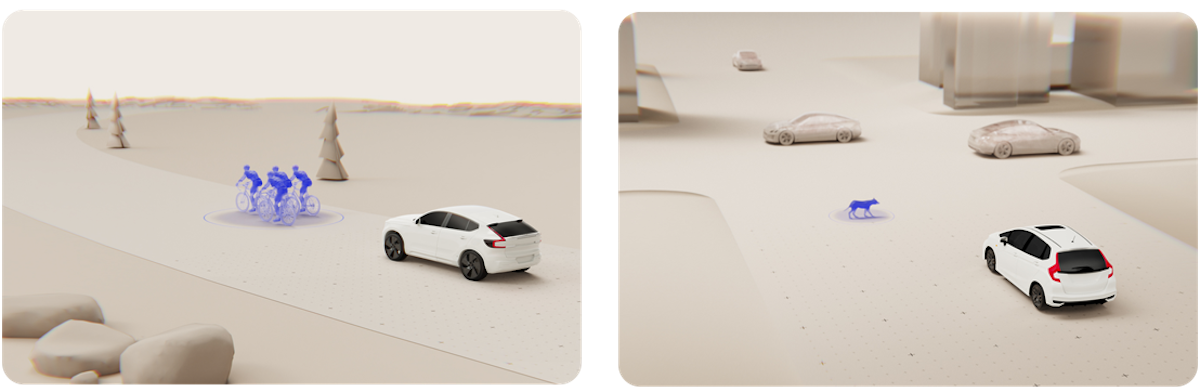

Honda plans to introduce next-generation electric and hybrid vehicles in North America and Japan around 2027, equipped with E2E AI-based driver-assistance systems developed with Helm.ai. The system is intended to operate on both city streets and highways, handling acceleration, deceleration, steering, and lane changes automatically while delivering assistance that feels natural to the driver. This AI-centered ADAS aims to balance reliability, driving smoothness, and development cost, and could become a core technology in Honda’s transition to the software-defined vehicle (SDV) era.

Overall, the partnership between Honda and Helm.ai represents a strategic shift in the evolution of autonomous driving—from hardware-oriented to intelligence-oriented development. Honda seeks to replace traditional, high-cost, and complex sensor configurations with a more lightweight, self-learning AI platform capable of continuous software-based improvement. If Honda successfully commercializes this E2E architecture by 2027 as planned, it could redefine the balance between safety, efficiency, and accessibility—emerging as one of the most closely watched developments in the global automotive industry.